eSeGeCe

software

HTTP2 Latency

In a previous post, I compared HTTP2 and HTTP1 performance over a single connection (see HTTP2 vs HTTP1 performance). In this post I compare the two protocols again, this time introducing latency, to show how latency affects HTTP requests.

When a client makes a request, there is always some latency, which varies with network conditions (traffic, distance to the server...). Latency hits the HTTP 1.1 protocol hard: to send a batch of requests, you must send the first request, wait for the server's response, send the next request, and so on. You can of course use more than one connection, but that requires more resources from the server and doesn't scale well.

The HTTP2 protocol uses a single connection for all requests, so latency matters less: you can send multiple requests without waiting for the earlier ones to be processed, which makes the whole process much faster.
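The difference can be put into rough numbers. The following Python snippet is only a back-of-the-envelope sketch, not a measurement: it assumes every request pays one full round trip, ignores bandwidth, connection setup and server-side limits, and assumes the server handles multiplexed requests in parallel. The function names and parameters are made up for illustration.

```python
def sequential_total_ms(num_requests: int, rtt_ms: float, server_ms: float = 0.0) -> float:
    """HTTP1-style estimate: each request waits for the previous response."""
    return num_requests * (rtt_ms + server_ms)


def multiplexed_total_ms(num_requests: int, rtt_ms: float, server_ms: float = 0.0) -> float:
    """HTTP2-style estimate: all requests are in flight at once, so the
    round trips overlap. Assumes the server processes them in parallel,
    which is why num_requests does not appear in the result."""
    return rtt_ms + server_ms


if __name__ == "__main__":
    # Hypothetical figures: 100 requests over a link with 50 ms of latency.
    print(sequential_total_ms(100, 50))   # 5000.0 ms
    print(multiplexed_total_ms(100, 50))  # 50.0 ms
```

Under these simplifying assumptions, the sequential cost grows with the number of requests, while the multiplexed cost stays close to a single round trip.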

HTTP2 Latency Test

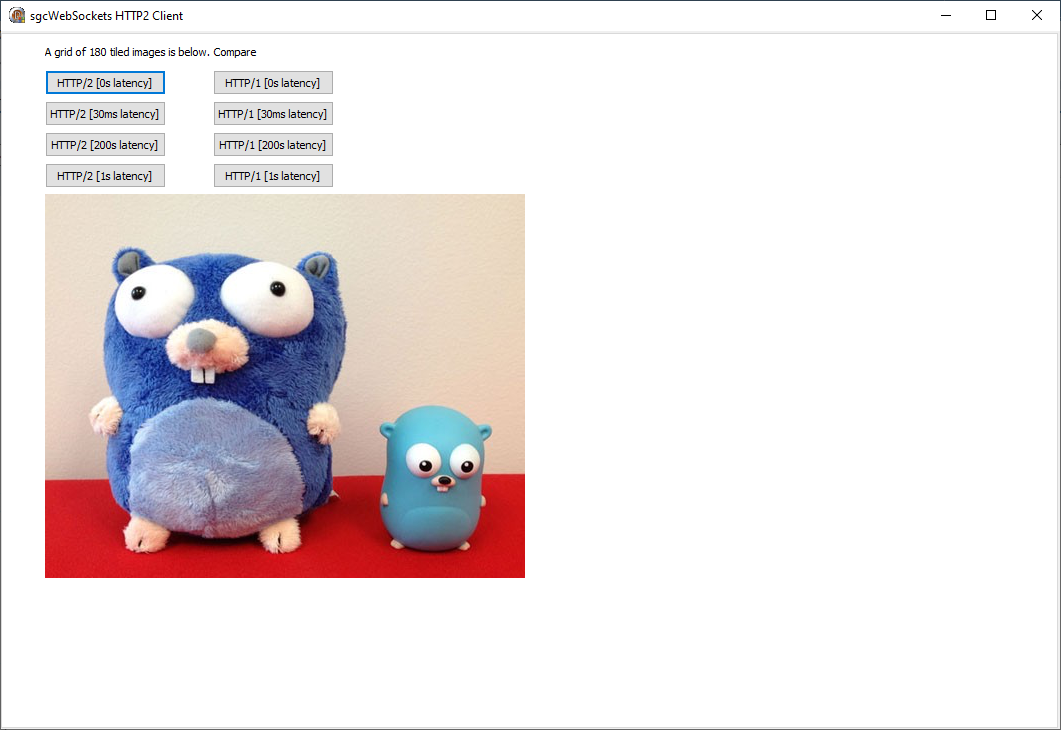

I use the free HTTP2 Golang test server to show how latency affects HTTP2 compared with HTTP1.

With the HTTP2 protocol, you simply send all the requests to the server and process the responses asynchronously. The process is very fast and takes less than 1 second.

With the HTTP1 protocol, by contrast, the requests are sent one by one, and each new request must wait for the server's response to the previous one, so the process is slow.
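The two behaviours described above can be imitated without any network at all. The sketch below is not the sgcWebSockets demo and does not speak HTTP: it replaces each request with a plain `asyncio.sleep` of a fixed, assumed latency and compares awaiting the requests one by one with launching them all together. All names (`fake_request`, `run_sequential`, `run_multiplexed`, `compare`) are hypothetical.

```python
import asyncio
import time


async def fake_request(latency_s: float) -> None:
    """Stand-in for one request: just waits for the simulated latency."""
    await asyncio.sleep(latency_s)


async def run_sequential(num_requests: int, latency_s: float) -> float:
    """HTTP1-like pattern: wait for each response before the next request."""
    start = time.perf_counter()
    for _ in range(num_requests):
        await fake_request(latency_s)
    return time.perf_counter() - start


async def run_multiplexed(num_requests: int, latency_s: float) -> float:
    """HTTP2-like pattern: start every request, then collect the responses."""
    start = time.perf_counter()
    await asyncio.gather(*(fake_request(latency_s) for _ in range(num_requests)))
    return time.perf_counter() - start


def compare(num_requests: int = 10, latency_s: float = 0.02) -> tuple[float, float]:
    """Return (sequential_seconds, multiplexed_seconds) for the same workload."""
    async def both() -> tuple[float, float]:
        sequential = await run_sequential(num_requests, latency_s)
        multiplexed = await run_multiplexed(num_requests, latency_s)
        return sequential, multiplexed

    return asyncio.run(both())


if __name__ == "__main__":
    seq, mux = compare()
    print(f"sequential:  {seq:.3f} s")
    print(f"multiplexed: {mux:.3f} s")
```

The exact timings depend on the machine, but the sequential run should take roughly the number of requests times the latency, while the multiplexed run should stay close to a single latency period.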

You can download a precompiled sgcWebSockets demo for Windows from the link below. Try the different options and compare the performance of both protocols.
